Product development

Develop a tool that helps students choose classes based on their career interest

📌 PROJECT SCOPE

Client: UT Austin, School of Information

Timeframe: 8 weeks

My Role: UX Researcher (Contract)

Team: Mia Eltiste (UXR), Metinee Ding (UXD)

Faculty advisor: Eric Nordquist

Supervisor: Dean Soo Young Rieh

Methods: Generative research, Competitive analysis, Database SQL coding, RITE Method testing lo-fi prototype

Tools: Figma, SublimeText, Zoom

Project Overview

🚀 CLIENT KICKOFF

Soo Young Rieh, Dean of Education at UT Austin’s School of Information, wanted a tool that helps students figure out which classes would be best for them to take based on their career interests.

🔎 OBJECTIVES

Improve the experience of current MSIS students planning their courses

Recommend courses based on students’ selected career interests

Highlight potential classes without creating a prescriptive pathway

✏️ NOTES

A developer was supposed to work with us on this project, but one was not recruited in time. Because of this, much of my work focused on database needs and fell outside the traditional UX Researcher role.

Methodology

Competitive Analysis

Survey Design + Analysis

Ideation

User Testing

Hi-fi Designs

Database Normalization

🏅 Competitive Analysis

Our team looked at direct competitors, similar programs at other universities, and indirect competitors such as Apple Music and Mario Kart to see how people choose among different types of content.

Competitive analysis findings

The competitive analysis showed that other universities had some sort of solution for the confusion individuals have coming into the program, but only the Stanford d.school addressed a similar problem for admitted students.

Must-haves

Tailor recommendations to user’s needs

Multiple option selection for areas of interest and career paths

The ability to go back and change something

Accessible without a UT log-in

Nice-to-haves

Imagery that describes each option accurately

Ability to indicate range of preference

Use previous courses taken and make recommendations based on this

Progress indicators

📮 Survey

The project started with a survey of current students and alumni of the MSIS program, with n=122 total respondents.

Survey Objectives

How do iSchool students categorize themselves (UX, Archives, Librarianship, etc.)?

How do iSchool students categorize themselves within their field (UX Researcher, UX Designer, etc.)?

What were the most valuable courses students took?

What courses do students recommend consistently?

(For alumni only) What skills are needed in their role and were these taught in the iSchool curriculum?

Card Sorting

We asked everyone to self-identify their specialty before giving them categories to choose from. This let us verify which areas of study needed to be included.

We identified four main fields:

User Experience & HCI

Archives and Records Management

Library Science

Data Science, Machine Learning, AI

Recommended Courses

Only the UX/HCI field had enough respondents to yield meaningful data about the course offerings.

I used the UX/HCI responses to work out how the database would need to classify classes, then applied that structure to all fields.

Responses were grouped into three categories:

Class ranking: the most useful classes

Class order: the ideal course sequence

Industry skills: the essential skills each role needs

In Miro, I color-coded each class a UX student put in the ideal curriculum. From this, I was able to see the differences between UX researchers and designers, or alumni and current students from a visual perspective. This helped me see the patterns that emerged without being tied into the details.

Ex. A lot of light blue appeared in the first semester, and it was voted one of the most important classes. The red class also shows up a lot, but mainly for UX designers. It is also frequently voted most important, but it gets placed more sporadically throughout the curriculum. This signals the red class may not need to be taken in sequence and may be more helpful for designers.

Survey findings

Students’ recommendations for the first two semesters were more standardized than for later semesters

Career interest impacted the usefulness of certain courses

More than just usefulness of a course dictated when it should be taken in the curriculum

Survey Implications

The recommender tool needs to be able to suggest courses to be taken early on in a student’s program

Some course recommendations depend on the student’s past and skill level so the tool would ideally take these into consideration

The first group of courses suggested can be determined by the field of interest, but eventually, the area of study or career interest will need to be taken into account in order to make helpful recommendations

🖊️ Lo-fi Designs

Metinee Ding, the UX designer, developed lo-fi designs of the recommender tool informed by the competitive analysis and survey results.

I provided some general thoughts and feedback on lo-fi designs before we used them in RITE testing. Other than that, the lo-fi designs were completely done by my teammate, Metinee Ding.

👥 User Testing

We interviewed 5 UX students so we could limit the prototype’s information to UX classes. We expected the way individuals interact with the tool would be the same and the findings would be universal in all areas of study.

💡 UX tip: RITE testing refers to Rapid Iterative Testing and Evaluation which is a way to get some user testing in while on a tight timeline. Use this technique when you are able to make changes quickly in between testing (every 2-3 users).

It was clear after 3 users that the current design was not working.

Individuals rarely scrolled down to even discover the schedule

Users had a lot of difficulty discerning which classes were being recommended

Some of the language we used, like “elective” and “foundational”, was problematic because people brought in their own assumptions about what the words meant

Other insights:

The four-semester schedule was not inclusive for non-traditional students

Drag & drop capability was not a clear affordance of the UI

Once we dropped the schedule as the way to present the information, we were able to focus simply on information architecture, and Metinee iterated on the designs (shown in the prototype section). This will likely also make the tool more accessible, as drag-and-drop interactions are often difficult to make accessible.

RITE findings

The information architecture was clearly the biggest issue and it prevented individuals from understanding which courses were recommended and which were chosen

Must-haves

Classes tagged by field, area of study, and/or career interest

Recommended classes for specializations offered at the iSchool

Level of courses (to update recommendations based on how far along a student is in the program)

Nice-to-haves

How frequently a class is offered and when it was last offered

Classes tagged by skills taught (soft skills, hard skills, industry skills, etc.)

A quicker way to understand course content besides syllabus

📊 Database

💡 Note: This is outside the scope of a UX researcher. There was no developer or database manager on our team, so I stepped in and used my introductory SQL knowledge to sort through the needs of the database before finalizing our design.

Because this would be a large project for the iSchool to undertake, I broke up the database needs into three stages:

Class listings table (+ existing attributes moved to new database)

Add new class attributes (for the recommender to use)

Robust database (nice-to-have features + feedback loop to keep recommendations relevant)

I also created the tables needed for the new database and entered sample data to show the relationship between the tables.

Stage 1: Normalized database

The biggest issue with the current database was that it was not normalized. Classes were listed according to the UT catalog’s requirements, which allow different classes to share the same class number. This made it impossible to assign class topics or any other attributes we’d want to add for the recommender tool.

I created the tables needed for the new database. The most important is the new “Table 3”, which gives each individual course its own primary key.

I manually entered example classes to show the relationships between the tables. Doing this for all the courses would be tedious, and once a developer is hired it can be automated with a script. The developer will be able to use this document to see how and why I set things up a particular way, and then decide if that’s the best choice moving forward.
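
The Stage 1 idea can be sketched with Python’s built-in sqlite3 module. This is a minimal illustration and not the project’s actual SQL file: the table and column names (`courses`, `course_id`, `catalog_number`, `topics`, `course_topics`), the catalog number, and the sample rows are all hypothetical. It shows two classes sharing one catalog number while each keeps its own primary key, so a topic can be attached to the individual course.

```python
import sqlite3

# Hypothetical schema: every name and sample row here is an illustrative
# assumption, not a table from the project's SQL document.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE courses (
    course_id      INTEGER PRIMARY KEY,  -- one key per individual course
    catalog_number TEXT NOT NULL,        -- may repeat across courses
    title          TEXT NOT NULL
);
CREATE TABLE topics (
    topic_id INTEGER PRIMARY KEY,
    name     TEXT NOT NULL UNIQUE
);
CREATE TABLE course_topics (
    course_id INTEGER NOT NULL REFERENCES courses(course_id),
    topic_id  INTEGER NOT NULL REFERENCES topics(topic_id),
    PRIMARY KEY (course_id, topic_id)
);
""")

# Two different classes that share the same catalog number.
conn.executemany(
    "INSERT INTO courses (course_id, catalog_number, title) VALUES (?, ?, ?)",
    [(1, "INF 000", "Example Course A"), (2, "INF 000", "Example Course B")],
)
conn.executemany(
    "INSERT INTO topics (topic_id, name) VALUES (?, ?)",
    [(1, "User Experience"), (2, "Archives")],
)
conn.executemany(
    "INSERT INTO course_topics (course_id, topic_id) VALUES (?, ?)",
    [(1, 1), (2, 2)],
)

# Each course resolves to its own topic despite the shared catalog number.
rows = conn.execute("""
    SELECT c.title, t.name
    FROM courses c
    JOIN course_topics ct ON ct.course_id = c.course_id
    JOIN topics t ON t.topic_id = ct.topic_id
    WHERE c.catalog_number = ?
    ORDER BY c.course_id
""", ("INF 000",)).fetchall()
```

Keying on `course_id` instead of the catalog number is what lets later stages add attributes per course.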

Stage 2: New class attributes

Once the database is normalized, each class will be able to have its own “information” associated with it. You could add endless amounts of information to describe classes, but with the survey results and user testing responses, I determined the most important ones to start with.

Students were on a journey from exploration to differentiation, and where they were in this journey would affect which courses should be recommended.

Course Level - An Archives student in their last semester may find an introductory UX course helpful, but a UX student would not

Course Type - Some courses were more broad (theoretical) and others taught hard skills

A course would not be labeled “essential” for everyone. Instead, the label would change based on each student’s needs.

Unique Rules - In order to create a successful recommendation system, the database would need to work alongside our quiz in order to produce unique conditions for when classes will be recommended (e.g. the capstone course will not be recommended to a student beginning the program, even though it is a core class).

Stage 2 would be where the recommender tool started taking the conditional rules and applying them to change how a class was defined.

In the SQL file, I left extensive notes on the rules I had found or thought would be a good way to sort through classes. Since these rules would be better informed by someone with more training, I left them as suggestions and recommended that the developer work with subject matter experts to determine these needs.
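
A conditional rule of this kind might look like the sketch below. Everything in it is an assumption for illustration: the `Course` fields, the `is_recommended` function, and both credit thresholds are hypothetical, and the real cutoffs would need to come from subject matter experts. It encodes the two examples from this section: the capstone is held back from students beginning the program, and an introductory course stops being recommended to late-stage students in the same field.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Course:
    title: str
    field_of_study: str
    level: str          # e.g. "Introductory", "Advanced"
    course_type: str    # e.g. "Core", "Breadth"
    is_capstone: bool = False


# Hypothetical thresholds, chosen only to make the example concrete.
CAPSTONE_MIN_CREDITS = 24
LATE_STAGE_CREDITS = 24


def is_recommended(course: Course, student_field: str, credits_completed: int) -> bool:
    """Apply conditional rules on top of the stored course attributes."""
    if course.is_capstone:
        # A core class, but not useful to someone beginning the program.
        return credits_completed >= CAPSTONE_MIN_CREDITS
    if course.level == "Introductory" and course.field_of_study == student_field:
        # Late-stage students have likely outgrown intro courses in their own field.
        return credits_completed < LATE_STAGE_CREDITS
    return True


capstone = Course("Capstone", "General", "Advanced", "Core", is_capstone=True)
intro_ux = Course("Example Intro UX Course", "User Experience & HCI", "Introductory", "Core")
```

With these assumed thresholds, a late-stage Archives student would still see `intro_ux`, while a late-stage UX student would not.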

Stage 3: Robust Database

The final stage of the database would incorporate all the information we hoped the tool could provide to students. The tool will still work without this information, but these items were commonly brought up in the survey and user interviews.

Tags such as “portfolio piece”

Specific skills or topics covered in the class

Deliverables (projects, paper, etc.)

This area is the least fleshed out as it depends on data we didn’t have available and because implementation will be dictated by the developer.

We also noted that it would be important to have a continuous feedback loop from individuals (students and teachers) so the recommender can receive the most relevant information.
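
One way to keep these Stage 3 details optional is a separate tag table joined with a LEFT JOIN, sketched here with Python’s sqlite3 module. The `course_tags` table, its columns, and the sample rows are hypothetical and not taken from the project’s SQL file. The point of the sketch is that a course with no tags yet still appears in the listing.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE courses (
    course_id INTEGER PRIMARY KEY,
    title     TEXT NOT NULL
);
-- Hypothetical optional table: a course may have zero or more tags.
CREATE TABLE course_tags (
    course_id INTEGER NOT NULL REFERENCES courses(course_id),
    tag       TEXT NOT NULL,
    PRIMARY KEY (course_id, tag)
);
INSERT INTO courses VALUES (1, 'Example Course A'), (2, 'Example Course B');
INSERT INTO course_tags VALUES (1, 'portfolio piece');
""")

# LEFT JOIN keeps courses that have no tags, so listings still work
# before the optional Stage 3 data has been filled in.
listing = conn.execute("""
    SELECT c.title, GROUP_CONCAT(ct.tag) AS tags
    FROM courses c
    LEFT JOIN course_tags ct ON ct.course_id = c.course_id
    GROUP BY c.course_id
    ORDER BY c.course_id
""").fetchall()

# Courses flagged as portfolio pieces; untagged courses have tags = None.
portfolio = [
    title for title, tags in listing
    if tags is not None and "portfolio piece" in tags.split(",")
]
```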

Overall Database Needs

During all stages, an admin side or the ability for instructors to update their information should be kept in mind and developed alongside the actual database.

Stage 1

Normalized database implementing new tables documented in SQL document

Move existing attributes to the new table of classes

Instructors assigned field/area of study and career attributes to act as proxy while classes get updated

Stage 2

Course levels (Introductory, Advanced, etc.)

Course type (Core, Breadth, etc.)

Build out rules for recommendations

Stage 3

“Nice-to-have” details (e.g. portfolio flag or skills taught) for each class

Feedback loop to keep recommendations updated and relevant to evolving industry needs and student feedback

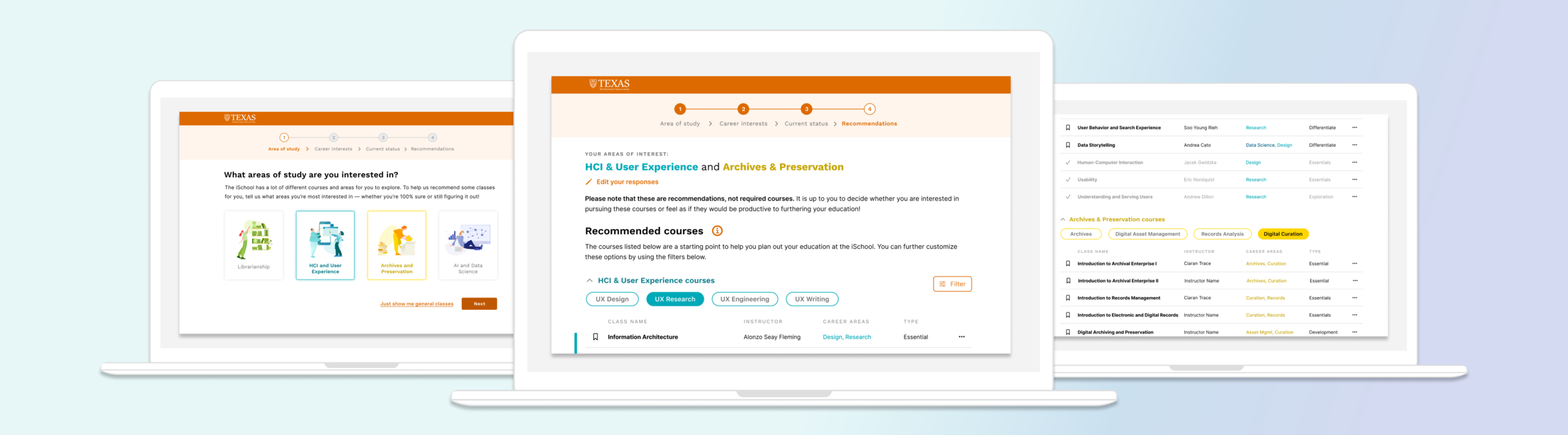

📱 Prototype (& final presentation)

Quiz Questions

There is the option to take a “quiz” or skip to get general recommendations and start interacting with the tool.

The quiz asks individuals:

Area of study (multi-select): Libraries, HCI/User Experience, Archives and Preservation, AI and Data Science

Career interests (sorted by the area of study)

Student status (and courses taken if a current student)

Quiz Answers

The quiz results will change which classes get recommended. The student’s experience level and career interests will be taken into account, and then relevant classes will be shown in a curated list.

Individuals will be categorized into 4 stages (which were named to reflect the types of classes an individual is interested in taking):

Exploration

Essentials

Development

Differentiate

The quiz results will be the main way to classify students. We can determine the stage based on the following:

What type of experience do they have?

The database considers: Course credits

A robust system could change recommendations based on previous classes taken or even the student’s background or work experience.

What type of future career do they envision?

The database considers: Field of interest, Area of study, Career interest

The recommendations should change based on a student’s stage. However, these rules are nuanced and contextual, so determining them was out of our scope.

I did provide some general guidance and extensive notes on any “proposed rules” in my database document for the future developer to review.
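
As a rough sketch of how course credits alone could map a student to one of the four stages, consider the snippet below. The credit cutoffs are purely hypothetical placeholders, since the actual rules were out of our scope, and the `stage_for` function is an illustrative name. A fuller version would also weigh field of interest, area of study, and career interest.

```python
from bisect import bisect_right

# The four stages named in the prototype, in journey order.
STAGES = ["Exploration", "Essentials", "Development", "Differentiate"]

# Hypothetical credit counts at which each later stage begins.
STAGE_CUTOFFS = [9, 18, 27]


def stage_for(credits_completed: int) -> str:
    """Map completed credits to a stage using the assumed cutoffs."""
    if credits_completed < 0:
        raise ValueError("credits_completed must be non-negative")
    # bisect_right counts how many cutoffs the student has reached.
    return STAGES[bisect_right(STAGE_CUTOFFS, credits_completed)]
```

For example, under these assumed cutoffs `stage_for(0)` gives "Exploration" and `stage_for(18)` gives "Development".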

Quiz Results

Students can edit their responses at any time.

Recommended courses will be sorted into four fields & one general category:

User Experience & HCI

Archives and Records Management

Library Science

Data Science, Machine Learning, AI

Generalized courses

Users can filter courses by many features:

Career interests (UX Design vs. UX Research)

Course type (Essential, Development, etc.)

Instructors, semesters offered
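
The filters above could be combined along these lines. This is a sketch only: the `Course` fields, the `filter_courses` function, and the sample catalog are hypothetical and do not reflect the final database schema. Each filter is optional, and a course must match every filter that is supplied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Course:
    title: str
    career_interests: frozenset
    course_type: str
    instructor: str
    semesters: frozenset


def filter_courses(courses, career_interest=None, course_type=None,
                   instructor=None, semester=None):
    """Return courses matching every filter that was provided (None = ignore)."""
    matches = []
    for course in courses:
        if career_interest is not None and career_interest not in course.career_interests:
            continue
        if course_type is not None and course.course_type != course_type:
            continue
        if instructor is not None and course.instructor != instructor:
            continue
        if semester is not None and semester not in course.semesters:
            continue
        matches.append(course)
    return matches


# Hypothetical sample data for illustration only.
catalog = [
    Course("Example Course A", frozenset({"UX Design"}), "Essential",
           "Instructor X", frozenset({"Fall"})),
    Course("Example Course B", frozenset({"UX Research", "UX Design"}), "Development",
           "Instructor Y", frozenset({"Fall", "Spring"})),
]

research_titles = [c.title for c in filter_courses(catalog, career_interest="UX Research")]
fall_design_titles = [
    c.title for c in filter_courses(catalog, career_interest="UX Design", semester="Fall")
]
```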

Saved Courses

Current and returning students can log in with their UT ID and have saved information:

Previously taken courses pre-entered

Saved or bookmarked courses

Tracking number of credits taken

💭 Reflection

Industry Takeaways

This was an extreme case, but I dropped almost all UX researcher responsibilities halfway through and focused solely on the database. My background in math, my startup, and relevant coursework allowed me to step into the semi-technical role.

Personal Takeaways

This was the first time I was able to apply my database management knowledge to a project and it was really exciting. It may inspire me to learn a little more down the road, but for now, I know enough to be able to talk with technical roles and figure out how that will impact the designs and recommendations I make.